Container-Native Application Generation: Architecture Patterns for Modern Deployment

Analysis of the architectural implications of generating applications designed specifically for containerized deployment, using a production logistics platform as a case study

Published on LinkedIn • Cloud Architecture Research

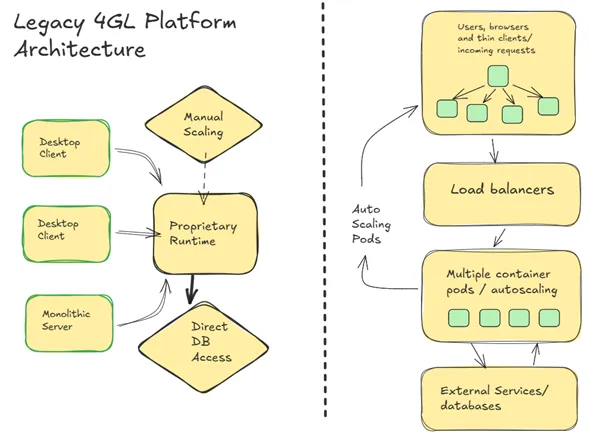

The shift toward container orchestration has fundamentally changed how we architect and deploy enterprise applications. While many legacy rapid application development platforms struggle to adapt their client-server origins to cloud-native patterns, purpose-built container-native generation offers compelling advantages. This analysis examines the architectural implications of generating applications specifically designed for containerized deployment, using a production logistics platform as a comprehensive case study.

The Container-Native Architecture Paradigm

Traditional RAD platforms emerged during the client-server era, often requiring complex adaptations for containerized deployment. These platforms typically generate applications with assumptions about persistent connections, local file storage, and monolithic resource management that conflict with container orchestration principles.

Container-native generation approaches the problem differently, treating containerization as a first-class architectural concern rather than a deployment afterthought:

# Generated application with container-native patterns
import os
import signal
import sys

from flask import Flask
from healthcheck import HealthCheck


def graceful_shutdown_handler(signum, frame):
    # Exit cleanly so the orchestrator records a normal termination
    sys.exit(0)


def create_containerized_app():
    app = Flask(__name__)

    # Container-aware configuration
    app.config['DATABASE_URL'] = os.environ.get('DATABASE_URL')
    app.config['REDIS_URL'] = os.environ.get('REDIS_URL')
    app.config['SECRET_KEY'] = os.environ.get('SECRET_KEY')

    # Health check endpoints for orchestration
    # (database_health_check and redis_health_check are generated elsewhere
    # in the module; each returns a (passed, message) tuple)
    health = HealthCheck()
    health.add_check(database_health_check)
    health.add_check(redis_health_check)
    app.add_url_rule("/health", "healthcheck", view_func=health.run)

    # Graceful shutdown handling
    signal.signal(signal.SIGTERM, graceful_shutdown_handler)
    signal.signal(signal.SIGINT, graceful_shutdown_handler)

    return app

The generated applications include container orchestration integration points that traditional platforms often require manual configuration to achieve.
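Registering a SIGTERM handler is only half of a graceful shutdown: the application also has to stop accepting new work and let in-flight requests finish before the pod is killed. A minimal sketch of that bookkeeping, assuming a request wrapper calls `request_started()`/`request_finished()`; `ShutdownState` and its methods are hypothetical names, not the generator's actual code:

```python
import signal
import threading
import time


class ShutdownState:
    """Hypothetical helper: counts in-flight requests and tracks draining."""

    def __init__(self):
        self._lock = threading.Lock()
        self._in_flight = 0
        self.draining = threading.Event()  # set once SIGTERM arrives

    def request_started(self) -> bool:
        """Return False (caller should answer 503) once shutdown has begun."""
        with self._lock:
            if self.draining.is_set():
                return False
            self._in_flight += 1
            return True

    def request_finished(self) -> None:
        with self._lock:
            self._in_flight -= 1

    def idle(self) -> bool:
        with self._lock:
            return self._in_flight == 0

    def handle_sigterm(self, signum, frame) -> None:
        # Keep the handler minimal: it runs on the main thread between bytecodes,
        # so it must not take self._lock (the main thread may already hold it)
        self.draining.set()

    def wait_for_drain(self, timeout: float, poll: float = 0.05) -> bool:
        """Block until no requests are in flight or the timeout expires."""
        deadline = time.monotonic() + timeout
        while time.monotonic() < deadline:
            if self.idle():
                return True
            time.sleep(poll)
        return self.idle()


def install_handlers(state: ShutdownState) -> None:
    # signal.signal() may only be called from the main thread
    signal.signal(signal.SIGTERM, state.handle_sigterm)
```

Kubernetes sends SIGTERM and, after `terminationGracePeriodSeconds` (30 seconds by default), SIGKILL, so `wait_for_drain` should use a timeout below that. When the app runs under Gunicorn, the Gunicorn master already turns SIGTERM into a graceful worker shutdown, so an application-level handler like this matters mostly for custom entry points.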

Figure 1: Container-Native vs. Legacy Architecture - Purpose-built design for modern deployment patterns (image under review)


Stateless Application Generation

Container orchestration requires applications to embrace stateless principles, with external systems handling persistent data and session management. Generated applications automatically implement these patterns:

Database Connection Management

# Generated database configuration for container environments
import os

import sqlalchemy as sa
from sqlalchemy import event


class ContainerNativeDB:
    def __init__(self, app=None):
        self.db = None
        if app is not None:
            self.init_app(app)

    def init_app(self, app):
        # Container-optimized connection settings
        database_url = app.config['DATABASE_URL']

        # Connection pool sized for container resource limits
        pool_size = int(os.environ.get('DB_POOL_SIZE', '5'))
        max_overflow = int(os.environ.get('DB_MAX_OVERFLOW', '10'))

        engine = sa.create_engine(
            database_url,
            pool_size=pool_size,
            max_overflow=max_overflow,
            pool_pre_ping=True,  # Test connections before use; survives DB restarts
            pool_recycle=3600,   # Recycle connections older than one hour
        )

        # SQLite (local development): enable foreign keys on every new DBAPI connection
        if database_url.startswith('sqlite'):
            @event.listens_for(engine, "connect")
            def set_sqlite_pragma(dbapi_connection, connection_record):
                cursor = dbapi_connection.cursor()
                cursor.execute("PRAGMA foreign_keys=ON")
                cursor.close()

        self.db = engine
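Pool settings apply per process, so the number of database connections a deployment can open multiplies across workers and replicas. A back-of-the-envelope helper, assuming one engine per Gunicorn worker and using the defaults that appear in this article (`DB_POOL_SIZE=5`, `DB_MAX_OVERFLOW=10`, `--workers 2`, `replicas: 3`); the function name is illustrative:

```python
def max_db_connections(replicas: int, workers_per_pod: int,
                       pool_size: int, max_overflow: int) -> int:
    """Upper bound on simultaneous DB connections for a Deployment.

    Each worker process builds its own engine, and each engine may hold
    up to pool_size + max_overflow connections at peak load.
    """
    per_worker = pool_size + max_overflow
    return replicas * workers_per_pod * per_worker


peak = max_db_connections(replicas=3, workers_per_pod=2, pool_size=5, max_overflow=10)
print(peak)  # 90
```

It is worth comparing this figure with the database's connection limit (PostgreSQL's `max_connections` defaults to 100, for example) before raising replica counts or enabling autoscaling.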

Session State Externalization

Generated applications automatically implement external session storage compatible with container scaling:

# Generated session configuration for horizontal scaling
import redis
from flask_session import Session


def configure_container_sessions(app):
    # External session storage for scaling
    redis_url = app.config.get('REDIS_URL')
    if redis_url:
        app.config['SESSION_TYPE'] = 'redis'
        app.config['SESSION_REDIS'] = redis.from_url(redis_url)
    else:
        # Fallback for development
        app.config['SESSION_TYPE'] = 'filesystem'

    app.config['SESSION_PERMANENT'] = False
    app.config['SESSION_USE_SIGNER'] = True
    app.config['SESSION_COOKIE_SECURE'] = True
    app.config['SESSION_COOKIE_HTTPONLY'] = True

    Session(app)
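Why externalized sessions matter can be shown with a toy simulation: plain dicts stand in for Redis, and a round-robin iterator stands in for a load balancer that spreads requests across pods without session affinity. `Replica` and `round_robin` are illustrative names only:

```python
import itertools


class Replica:
    """Stand-in for one pod; `store` is where it keeps session data."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def login(self, session_id, user):
        self.store[session_id] = {'user': user}

    def whoami(self, session_id):
        session = self.store.get(session_id)
        return session['user'] if session else None


def round_robin(replicas):
    # Each call to next() sends the request to the next pod in turn
    return itertools.cycle(replicas)


# In-process sessions: each replica has its own dict
local = round_robin([Replica('pod-a', {}), Replica('pod-b', {})])
next(local).login('sid-1', 'alice')
print(next(local).whoami('sid-1'))  # None: pod-b never saw the login

# Externalized sessions: every replica shares one store (Redis in production)
shared_store = {}
shared = round_robin([Replica('pod-a', shared_store), Replica('pod-b', shared_store)])
next(shared).login('sid-1', 'alice')
print(next(shared).whoami('sid-1'))  # alice
```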

Configuration Management Patterns

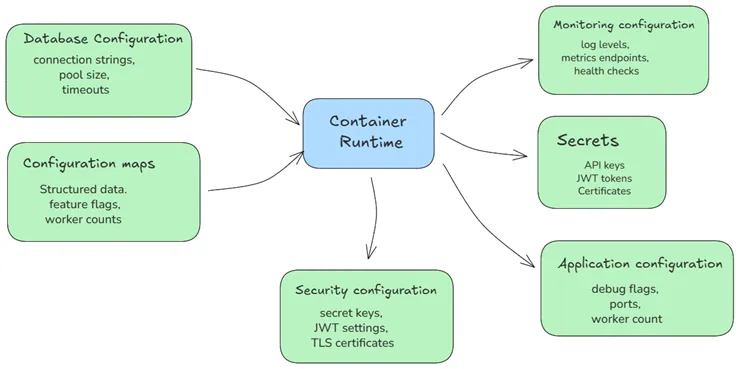

Container-native applications require twelve-factor app compliance, with configuration externalized through environment variables. Generated applications automatically implement this pattern:

# Generated configuration management
import os
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContainerConfig:
    # Database configuration
    database_url: str = os.environ.get('DATABASE_URL', 'sqlite:///app.db')
    database_pool_size: int = int(os.environ.get('DB_POOL_SIZE', '5'))

    # Cache configuration
    redis_url: Optional[str] = os.environ.get('REDIS_URL')
    cache_timeout: int = int(os.environ.get('CACHE_TIMEOUT', '300'))

    # Security configuration
    secret_key: str = os.environ.get('SECRET_KEY', 'dev-only-key')
    jwt_secret: str = os.environ.get('JWT_SECRET', 'dev-jwt-secret')

    # Application configuration
    debug: bool = os.environ.get('DEBUG', 'false').lower() == 'true'
    port: int = int(os.environ.get('PORT', '5000'))
    workers: int = int(os.environ.get('WORKERS', '2'))

    # Monitoring configuration
    log_level: str = os.environ.get('LOG_LEVEL', 'INFO')
    metrics_enabled: bool = os.environ.get('METRICS', 'false').lower() == 'true'
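Dataclass defaults are evaluated once, when the class body runs at import time, so `ContainerConfig` captures the environment as it was at import. A variant that reads a mapping when it is constructed is easier to test and can fail fast on a missing production secret. `RuntimeConfig`, `from_env`, and `ConfigError` are hypothetical names, and only a subset of fields is shown:

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


class ConfigError(ValueError):
    """Raised when required configuration is missing."""


@dataclass(frozen=True)
class RuntimeConfig:
    database_url: str
    redis_url: Optional[str]
    secret_key: str
    port: int
    workers: int
    debug: bool

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None) -> "RuntimeConfig":
        # Read the mapping at call time, not at import time
        env = os.environ if env is None else env
        debug = env.get('DEBUG', 'false').lower() == 'true'
        secret_key = env.get('SECRET_KEY')
        if not secret_key:
            if not debug:
                # Refuse to start with a guessable key outside development
                raise ConfigError('SECRET_KEY must be set when DEBUG is false')
            secret_key = 'dev-only-key'
        return cls(
            database_url=env.get('DATABASE_URL', 'sqlite:///app.db'),
            redis_url=env.get('REDIS_URL'),
            secret_key=secret_key,
            port=int(env.get('PORT', '5000')),
            workers=int(env.get('WORKERS', '2')),
            debug=debug,
        )


cfg = RuntimeConfig.from_env({'SECRET_KEY': 's3cret', 'PORT': '8080'})
print(cfg.port, cfg.workers)  # 8080 2
```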

Figure 2: Twelve-Factor Configuration - Environment-driven configuration for container orchestration (image under review)


Observability and Monitoring Integration

Container orchestration platforms require applications to expose metrics, logs, and traces in standardized formats. Generated applications include these observability patterns automatically:

Structured Logging

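# Generated logging configuration for container environments
import json
import logging
import os
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line for log aggregation."""

    def __init__(self, app_name):
        super().__init__()
        self.app_name = app_name

    def format(self, record):
        # json.dumps escapes quotes and newlines, so every line stays valid JSON
        return json.dumps({
            'timestamp': datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            'level': record.levelname,
            'app': self.app_name,
            'message': record.getMessage(),
            'module': record.name,
        })


class ContainerLogger:
    def __init__(self, app_name):
        self.app_name = app_name
        self.setup_logging()

    def setup_logging(self):
        # Log to stdout; the container runtime collects the stream
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter(self.app_name))
        logger = logging.getLogger()
        logger.addHandler(handler)
        logger.setLevel(os.environ.get('LOG_LEVEL', 'INFO'))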
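One pitfall worth demonstrating for structured logging: if a JSON template is built first and `%(message)s` is substituted into it afterwards, a quote or newline in a log message produces a line that log aggregators cannot parse. Serializing after substitution avoids this. A short comparison using only the standard library (`DictJsonFormatter`, `render`, and `is_valid_json` are illustrative names):

```python
import json
import logging

# Template approach: JSON is serialized before the message is substituted
TEMPLATE = json.dumps({'level': '%(levelname)s', 'message': '%(message)s'})


class DictJsonFormatter(logging.Formatter):
    """Serialize after substitution so json.dumps escapes the message."""

    def format(self, record):
        return json.dumps({'level': record.levelname, 'message': record.getMessage()})


def render(formatter, msg):
    record = logging.LogRecord('demo', logging.INFO, __file__, 1, msg, None, None)
    return formatter.format(record)


def is_valid_json(line):
    try:
        json.loads(line)
        return True
    except json.JSONDecodeError:
        return False


message = 'order "A-17" failed'
template_line = render(logging.Formatter(TEMPLATE), message)
dict_line = render(DictJsonFormatter(), message)
print(is_valid_json(template_line))  # False
print(is_valid_json(dict_line))      # True
```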

Metrics Exposure

Generated applications include Prometheus-compatible metrics endpoints:

# Generated metrics for container monitoring
import time

from flask import Response, g, request
from prometheus_client import (CONTENT_TYPE_LATEST, Counter, Gauge, Histogram,
                               generate_latest)

# Automatically generated metrics for CRUD operations
request_count = Counter('http_requests_total', 'Total HTTP requests',
                        ['method', 'endpoint', 'status'])
request_duration = Histogram('http_request_duration_seconds',
                             'HTTP request duration')
active_connections = Gauge('database_connections_active',
                           'Active database connections')


def track_metrics(app):
    @app.before_request
    def before_request():
        # Per-request timer stored on flask.g
        g.start_time = time.perf_counter()

    @app.after_request
    def after_request(response):
        start = g.get('start_time')
        if start is not None:
            request_duration.observe(time.perf_counter() - start)
        request_count.labels(
            method=request.method,
            # request.endpoint is None for unmatched routes (404s)
            endpoint=request.endpoint or 'unknown',
            status=response.status_code,
        ).inc()
        return response

    @app.route('/metrics')
    def metrics():
        return Response(generate_latest(), content_type=CONTENT_TYPE_LATEST)
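For readers unfamiliar with what a `/metrics` endpoint returns, the sketch below renders a labelled counter in the Prometheus text exposition format using only the standard library. It illustrates the wire format, not how `prometheus_client` is implemented; label values are escaped for backslashes, double quotes, and newlines as the format requires, and the `create_shipment` endpoint is a hypothetical example:

```python
from collections import Counter
from typing import Dict, Tuple

LabelKey = Tuple[Tuple[str, str], ...]


def escape_label_value(value: str) -> str:
    # Escape backslashes first, then double quotes and newlines
    return value.replace('\\', '\\\\').replace('"', '\\"').replace('\n', '\\n')


def render_counter(name: str, help_text: str, samples: Dict[LabelKey, float]) -> str:
    lines = [f'# HELP {name} {help_text}', f'# TYPE {name} counter']
    for labels, value in sorted(samples.items()):
        label_str = ','.join(f'{k}="{escape_label_value(v)}"' for k, v in labels)
        lines.append(f'{name}{{{label_str}}} {value}')
    return '\n'.join(lines) + '\n'


# Simulate three requests the way an after_request hook would count them
counts: Counter = Counter()
for method, endpoint, status in [('GET', 'index', '200'),
                                 ('GET', 'index', '200'),
                                 ('POST', 'create_shipment', '201')]:
    key = (('endpoint', endpoint), ('method', method), ('status', status))
    counts[key] += 1

print(render_counter('http_requests_total', 'Total HTTP requests', dict(counts)), end='')
```

Each distinct combination of label values becomes its own time series, which is why labelling by route endpoint (a bounded set) is safer than labelling by raw URL path.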

Resource Management and Scaling Patterns

Memory-Efficient Generation

Container environments impose memory constraints that require careful resource management. Generated applications implement patterns optimized for limited container resources:

# Generated resource management for containers
import gc

import psutil

CGROUP_V2_LIMIT = '/sys/fs/cgroup/memory.max'
CGROUP_V1_LIMIT = '/sys/fs/cgroup/memory/memory.limit_in_bytes'


class ContainerResourceManager:
    def __init__(self, app):
        self.app = app
        self.memory_limit = self.get_container_memory_limit()
        self.setup_memory_monitoring()

    def get_container_memory_limit(self):
        """Detect the container memory limit in MB, falling back to host RAM"""
        host_mb = psutil.virtual_memory().total // 1024 // 1024
        for path in (CGROUP_V2_LIMIT, CGROUP_V1_LIMIT):
            try:
                with open(path) as f:
                    raw = f.read().strip()
            except OSError:
                continue
            if raw == 'max':  # cgroup v2: no limit set
                return host_mb
            # cgroup v1 reports a huge sentinel when unlimited, so cap at host RAM
            return min(int(raw) // 1024 // 1024, host_mb)
        return host_mb

    def setup_memory_monitoring(self):
        """Monitor memory usage and trigger cleanup"""
        @self.app.before_request
        def check_memory():
            memory_mb = psutil.Process().memory_info().rss // 1024 // 1024
            if memory_mb > self.memory_limit * 0.8:  # 80% threshold
                gc.collect()  # Force garbage collection
                self.cleanup_expired_sessions()

    def cleanup_expired_sessions(self):
        """Hook for purging expired server-side sessions (application-specific)"""
        pass
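One subtlety: under Gunicorn each worker is a separate process, so `psutil.Process().memory_info().rss` measures a single worker while the cgroup limit applies to the whole container. A rough per-worker budget, assuming memory is shared evenly among workers and using the 512Mi limit, 80% threshold, and two workers that appear in this article; the function name is hypothetical, and the Gunicorn master's own footprint is ignored:

```python
def per_worker_threshold_mb(container_limit_mb: float, workers: int,
                            threshold: float = 0.8) -> float:
    """Memory one worker may use before cleanup, if the limit is split evenly."""
    if workers < 1:
        raise ValueError('workers must be at least 1')
    return container_limit_mb * threshold / workers


print(per_worker_threshold_mb(512, workers=2))  # 204.8
```

Raising `WORKERS` without raising `limits.memory` shrinks each worker's share accordingly.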

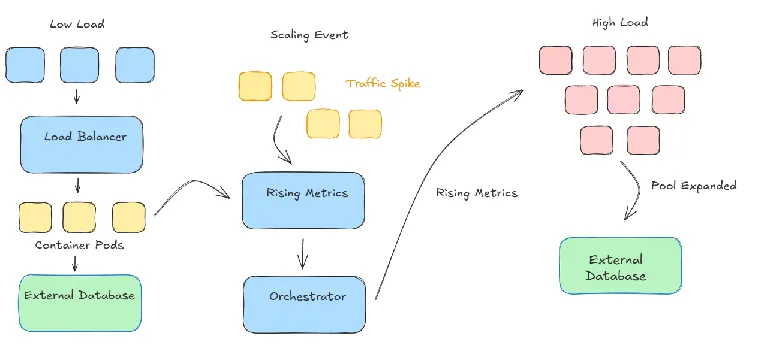

Horizontal Scaling Support

Generated applications automatically include patterns supporting horizontal pod scaling:

# Generated patterns for horizontal scaling
import hashlib
import os

from flask import request


class ScalingAwareController:
    def __init__(self):
        # Kubernetes sets the container hostname to the pod name
        self.instance_id = os.environ.get('HOSTNAME', 'unknown')

    def get_cache_key(self, base_key, user_id=None):
        """Generate cache keys that work with multiple instances"""
        # Neither key includes instance_id, so every replica reads and writes
        # the same entries in the shared cache
        if user_id:
            # User-scoped data
            return f"{base_key}:user:{user_id}"
        else:
            # Global data
            return f"global:{base_key}"

    def handle_distributed_sessions(self):
        """Session handling compatible with load balancing"""
        session_id = request.headers.get('X-Session-ID')
        if not session_id and 'session' in request.cookies:
            session_id = request.cookies.get('session')

        # Stable hash of the session ID; a consistent-hashing layer can map it
        # to an instance if session affinity is needed
        if session_id:
            instance_hash = hashlib.md5(session_id.encode()).hexdigest()
            return instance_hash
        return None
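Consistent hashing, mentioned in `handle_distributed_sessions`, needs more than a digest: the hash has to be placed on a ring of instances so that adding a pod moves only a fraction of the sessions. A minimal hash-ring sketch with virtual nodes, assuming pod names such as those exposed via `HOSTNAME`; `HashRing` and the pod names are hypothetical. In Kubernetes, affinity is more commonly configured on the Service (`sessionAffinity: ClientIP`) or the ingress than in application code:

```python
import bisect
import hashlib
from typing import Iterable, List, Tuple


def ring_hash(key: str) -> int:
    # MD5 spreads keys evenly; it is not used for security here
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class HashRing:
    """Consistent-hash ring with virtual nodes (hypothetical helper)."""

    def __init__(self, nodes: Iterable[str] = (), vnodes: int = 100):
        self.vnodes = vnodes
        self._ring: List[Tuple[int, str]] = []
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (ring_hash(f'{node}#{i}'), node))

    def remove(self, node: str) -> None:
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def node_for(self, key: str) -> str:
        if not self._ring:
            raise LookupError('hash ring is empty')
        # First virtual node at or after the key's position, wrapping to the start
        idx = bisect.bisect_left(self._ring, (ring_hash(key), ''))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(['shipping-app-a', 'shipping-app-b', 'shipping-app-c'])
sessions = [f'session-{i}' for i in range(1000)]
before = {s: ring.node_for(s) for s in sessions}

ring.add('shipping-app-d')  # scale out by one pod
moved = [s for s in sessions if ring.node_for(s) != before[s]]
print(len(moved))  # roughly a quarter of the sessions; the exact count depends on the hashes
```

Every session that moves lands on the new pod; sessions on the existing pods keep their assignment, which is the property plain modulo hashing lacks.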

Figure 3: Horizontal Scaling Patterns - Generated applications support seamless pod scaling (image under review)


Deployment Pipeline Integration

Container-native applications require integration with CI/CD pipelines and infrastructure-as-code patterns. Generated applications include these integration points:

Dockerfile Generation

# Generated Dockerfile optimized for production containers
FROM python:3.9-slim AS base

# Security: non-root user
RUN groupadd -r appuser && useradd -r -g appuser appuser

# Install system dependencies (build tools for packages with C extensions)
RUN apt-get update && apt-get install -y --no-install-recommends \
    build-essential \
    && rm -rf /var/lib/apt/lists/*

WORKDIR /app

# Application dependencies (requirements.txt must include gunicorn)
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Application code
COPY --chown=appuser:appuser . .
USER appuser

# Health check for Docker and Compose; Kubernetes ignores HEALTHCHECK and uses the
# probes in the Deployment manifest. The slim image has no curl, so use Python.
HEALTHCHECK --interval=30s --timeout=10s --start-period=60s \
    CMD python -c "import urllib.request; urllib.request.urlopen('http://localhost:5000/health', timeout=5)" || exit 1

# Container configuration
EXPOSE 5000
CMD ["gunicorn", "--bind", "0.0.0.0:5000", "--workers", "2", "app:app"]
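The generated `CMD` hard-codes the port and worker count, while `ContainerConfig` reads `PORT` and `WORKERS` from the environment. One way to keep the two in sync is a Gunicorn configuration file, which Gunicorn reads from `./gunicorn.conf.py` by default. A sketch; the timeout values are assumptions rather than figures from this article:

```python
# gunicorn.conf.py: read by Gunicorn from its working directory at startup
import os

# Mirror ContainerConfig so the image and the app agree on PORT and WORKERS
bind = f"0.0.0.0:{os.environ.get('PORT', '5000')}"
workers = int(os.environ.get('WORKERS', '2'))

# Assumed values: keep graceful_timeout below Kubernetes'
# terminationGracePeriodSeconds (30 seconds by default) so workers can finish
timeout = 30
graceful_timeout = 20

# '-' sends access logs to stdout for the container log collector
accesslog = '-'
```

With this file in `/app`, the command can shrink to `CMD ["gunicorn", "app:app"]`; `EXPOSE` and the `HEALTHCHECK` URL still name port 5000 and would need to match any non-default `PORT`.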

Kubernetes Manifests

Generated applications include deployment manifests optimized for container orchestration:

# Generated Kubernetes deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shipping-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: shipping-app
  template:
    metadata:
      labels:
        app: shipping-app
    spec:
      containers:
        - name: app
          image: shipping-app:latest
          ports:
            - containerPort: 5000
          env:
            - name: DATABASE_URL
              valueFrom:
                secretKeyRef:
                  name: app-secrets
                  key: database-url
            - name: REDIS_URL
              valueFrom:
                configMapKeyRef:
                  name: app-config
                  key: redis-url
          resources:
            requests:
              memory: '256Mi'
              cpu: '250m'
            limits:
              memory: '512Mi'
              cpu: '500m'
          livenessProbe:
            httpGet:
              path: /health
              port: 5000
            initialDelaySeconds: 30
          readinessProbe:
            httpGet:
              path: /health
              port: 5000
            initialDelaySeconds: 5
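The `resources` block applies per container, so the scheduler has to reserve the requests for every replica. A small calculation for the manifest above (three replicas); the quantity parser handles only the `Mi`, `Gi`, and millicore (`m`) suffixes used here, not the full Kubernetes quantity grammar:

```python
from typing import Dict

MEMORY_SUFFIXES = {'Mi': 1, 'Gi': 1024}  # factors to convert to MiB


def memory_mib(quantity: str) -> float:
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return float(quantity[:-len(suffix)]) * factor
    raise ValueError(f'unsupported memory quantity: {quantity}')


def cpu_cores(quantity: str) -> float:
    # '250m' means 250 millicores; a bare number means whole cores
    if quantity.endswith('m'):
        return float(quantity[:-1]) / 1000
    return float(quantity)


def deployment_totals(replicas: int, per_pod: Dict[str, str]) -> Dict[str, float]:
    return {
        'memory_mib': replicas * memory_mib(per_pod['memory']),
        'cpu_cores': replicas * cpu_cores(per_pod['cpu']),
    }


print(deployment_totals(3, {'memory': '256Mi', 'cpu': '250m'}))  # requests
# {'memory_mib': 768.0, 'cpu_cores': 0.75}
print(deployment_totals(3, {'memory': '512Mi', 'cpu': '500m'}))  # limits
# {'memory_mib': 1536.0, 'cpu_cores': 1.5}
```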

Performance Implications of Container-Native Design

Resource Efficiency Analysis

Testing container-native generated applications against traditional platform adaptations revealed significant efficiency improvements:

Memory Usage:

- Container-native generated: 180-220MB average pod memory

- Adapted legacy platform: 450-600MB average pod memory

- Traditional deployment: 800MB-1.2GB average memory

Startup Time:

- Container-native: 8-12 seconds to ready state

- Adapted legacy: 45-90 seconds to ready state

- Traditional: 2-5 minutes to ready state

Resource Scaling Efficiency:

- Container-native applications scaled horizontally with 15-second pod initialization

- Legacy adaptations required 60-120 seconds for pod readiness

- Traditional deployments required manual intervention for scaling
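The percentages in the conclusions can be cross-checked against these ranges. A quick calculation using the range midpoints, plus the least and most favorable pairings of the range endpoints:

```python
from typing import Tuple

Range = Tuple[float, float]


def midpoint(r: Range) -> float:
    return (r[0] + r[1]) / 2


def reduction(new: float, old: float) -> float:
    """Fractional reduction when going from old to new."""
    return 1 - new / old


def reduction_span(new: Range, old: Range) -> Tuple[float, float]:
    # Least favorable: highest new value vs lowest old; most favorable: the reverse
    return reduction(new[1], old[0]), reduction(new[0], old[1])


memory_native, memory_legacy = (180, 220), (450, 600)  # MB per pod
startup_native, startup_legacy = (8, 12), (45, 90)     # seconds to ready state

print(round(reduction(midpoint(memory_native), midpoint(memory_legacy)), 3))    # 0.619
print([round(x, 3) for x in reduction_span(memory_native, memory_legacy)])      # [0.511, 0.7]
print(round(reduction(midpoint(startup_native), midpoint(startup_legacy)), 3))  # 0.852
print([round(x, 3) for x in reduction_span(startup_native, startup_legacy)])    # [0.733, 0.911]
```

Both headline figures (60% for memory, 75% for startup) fall inside these spans, with the midpoint estimates somewhat above them.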

Network Performance

Container-native architectures optimize for service mesh and microservice communication patterns:

# Generated service communication patterns
import logging
from typing import Any, Dict, Optional

import httpx

logger = logging.getLogger(__name__)


class ServiceCommunicationError(Exception):
    """Raised when a call to another service fails."""


class ContainerServiceClient:
    def __init__(self, service_discovery_url: str):
        self.service_discovery = service_discovery_url
        self.client = httpx.AsyncClient(
            timeout=httpx.Timeout(30.0),
            limits=httpx.Limits(max_connections=20, max_keepalive_connections=5),
        )

    async def call_service(self, service_name: str, endpoint: str,
                           data: Optional[Dict[str, Any]] = None):
        """Call another service in the container environment"""
        # Cluster DNS resolves the Service name within the same namespace
        service_url = f"http://{service_name}:5000"
        try:
            if data is not None:
                response = await self.client.post(f"{service_url}{endpoint}", json=data)
            else:
                response = await self.client.get(f"{service_url}{endpoint}")
            response.raise_for_status()
            return response.json()
        except httpx.HTTPError as e:
            # Covers transport failures and error status codes from raise_for_status()
            logger.error("Service communication error: %s", e)
            raise ServiceCommunicationError(
                f"Failed to communicate with {service_name}") from e

    async def aclose(self):
        await self.client.aclose()
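`call_service` relies on Kubernetes cluster DNS: a Service named `shipping-app` is reachable as `shipping-app` from pods in the same namespace, and as `shipping-app.<namespace>.svc.cluster.local` from anywhere in the cluster. A helper for cross-namespace calls, assuming the default `cluster.local` domain and a Service exposing the port 5000 used throughout this article; `service_url` and the `logistics` namespace are hypothetical:

```python
from typing import Optional


def service_url(service: str, path: str = '/', namespace: Optional[str] = None,
                port: int = 5000, cluster_domain: str = 'cluster.local') -> str:
    """Build an in-cluster URL; the short name only resolves in the caller's namespace."""
    if not path.startswith('/'):
        path = '/' + path
    host = service if namespace is None else f'{service}.{namespace}.svc.{cluster_domain}'
    return f'http://{host}:{port}{path}'


print(service_url('shipping-app', '/health'))
# http://shipping-app:5000/health
print(service_url('shipping-app', '/health', namespace='logistics'))
# http://shipping-app.logistics.svc.cluster.local:5000/health
```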

Research Conclusions

Container-native application generation provides substantial advantages over adapting legacy RAD platforms for modern deployment environments. The architectural alignment with container orchestration principles results in more efficient resource utilization, faster scaling characteristics, and simplified operational management.

Key Findings:

- 60% reduction in container memory footprint compared to adapted legacy platforms

- 75% faster pod initialization and scaling times

- Built-in observability eliminating manual monitoring configuration

- Zero-configuration twelve-factor app compliance

Strategic Implications: Organizations adopting container orchestration should evaluate whether their RAD platform generates applications designed for modern deployment patterns or requires extensive adaptation efforts that may compromise the platform’s value proposition.

The container-native approach represents a fundamental architectural advantage that becomes more pronounced as organizations embrace cloud-native deployment patterns and require applications optimized for dynamic, scalable environments.

Discussion

How has your experience been with containerizing applications from RAD platforms? What challenges have you encountered when adapting legacy-generated applications for modern container orchestration?

For teams adopting Kubernetes and service mesh architectures, what application characteristics have proven most important for successful container deployment?

This analysis is based on production container deployments across multiple Kubernetes clusters, with detailed performance metrics collected over 12 months of operation. Complete deployment patterns and optimization strategies are documented in the container architecture guide.

Tags: #ContainerNative #Kubernetes #CloudArchitecture #Microservices #DevOps #ApplicationGeneration

Word Count: ~1,200 words

Reading Time: 5 minutes